Comic-Guided Speech Synthesis

Yujia Wang¹, Wenguan Wang¹,², Wei Liang¹, Lap-Fai Yu³

¹Beijing Institute of Technology, ²Inception Institute of Artificial Intelligence, ³George Mason University

Abstract

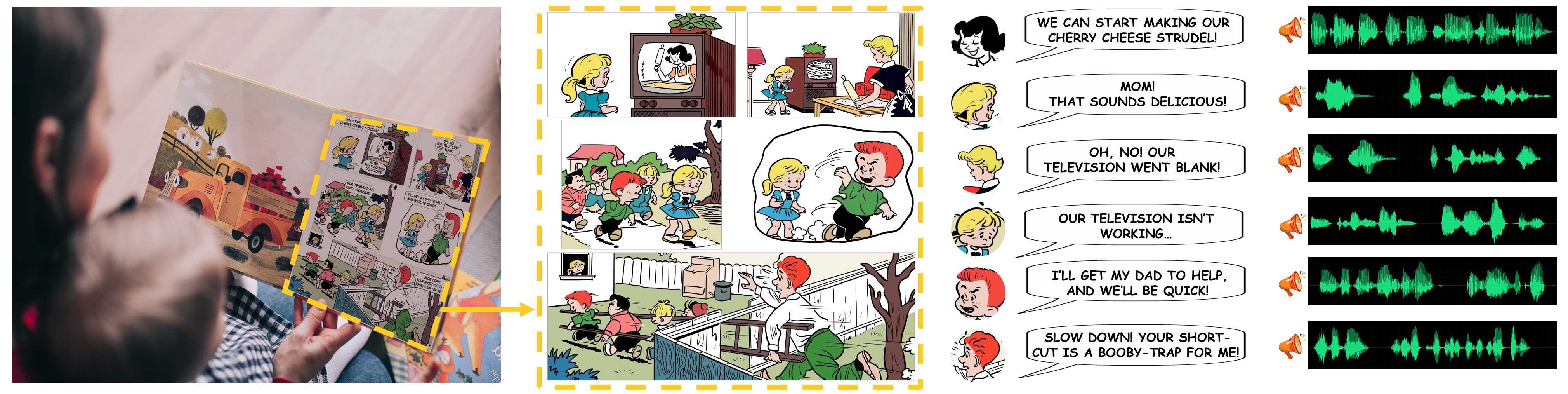

We introduce a novel approach for synthesizing realistic speech for comics. Given a comic page as input, our approach synthesizes speech for each comic character, following the reading flow. It adopts a cascading strategy with two stages: Comic Visual Analysis and Comic Speech Synthesis. In the first stage, the input comic page is analyzed to identify the gender and age of each character, as well as the text each character speaks and the corresponding emotion. Guided by this analysis, the second stage synthesizes realistic speech for each character that is consistent with the visual observations. Our experiments show that the proposed approach can synthesize realistic and lively speech for different types of comics. Perceptual studies performed on the synthesis results of multiple sample comics validate the efficacy of our approach.
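The hand-off between the two stages can be illustrated with a minimal sketch. It is not the authors' implementation: the class names, field names, and the `plan_speech` function are hypothetical, the analysis output is written by hand rather than predicted from an image, and no audio is produced. It only shows how per-character attributes (gender, age) and per-balloon attributes (text, emotion, reading order) might be combined into ordered requests for a synthesizer.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Character:
    """Attributes the first stage is described as identifying per character."""
    name: str      # hypothetical identifier, not taken from the paper
    gender: str
    age: str


@dataclass(frozen=True)
class Utterance:
    """One speech balloon: who speaks, what is said, and with which emotion."""
    order: int     # position in the reading flow (0 is read first)
    speaker: str
    text: str
    emotion: str


@dataclass(frozen=True)
class SpeechRequest:
    """Everything a second-stage synthesizer would be conditioned on."""
    text: str
    gender: str
    age: str
    emotion: str


def plan_speech(characters: List[Character],
                utterances: List[Utterance]) -> List[SpeechRequest]:
    """Turn stage-one analysis output into stage-two requests in reading order."""
    by_name: Dict[str, Character] = {c.name: c for c in characters}
    requests = []
    for u in sorted(utterances, key=lambda item: item.order):
        speaker = by_name[u.speaker]
        requests.append(
            SpeechRequest(u.text, speaker.gender, speaker.age, u.emotion)
        )
    return requests


# Hand-written stand-in for the visual analysis of one comic page.
characters = [Character("A", "female", "adult"), Character("B", "male", "child")]
utterances = [
    Utterance(1, "B", "I'm fine!", "happy"),
    Utterance(0, "A", "Are you okay?", "worried"),
]

for request in plan_speech(characters, utterances):
    print(request)
```

Keeping character attributes separate from utterances means a character's gender and age are stated once and reused for every balloon that character speaks, which is one simple way to keep a voice consistent across a page.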

Keywords

Comics, Speech Synthesis, Deep Learning.

Publication

Comic-Guided Speech Synthesis

Yujia Wang, Wenguan Wang, Wei Liang, Lap-Fai Yu

ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia 2019)

Paper, Supplementary, Video, Speech Results (Web Version), Dataset (Coming Soon)

BibTeX

@article{comic2019wang,
  title = {Comic-Guided Speech Synthesis},
  author = {Wang, Yujia and Wang, Wenguan and Liang, Wei and Yu, Lap-Fai},
  journal = {ACM Transactions on Graphics},
  volume = {38},
  number = {6},
  year = {2019}
}

- Media Computing and Intelligent Systems Lab

Copyright Beijing Institute of Technology. Address: 5 South Zhongguancun Street, Haidian District, Beijing. Postcode: 100081